An Instant Sign Language Translation App

HandsTalk

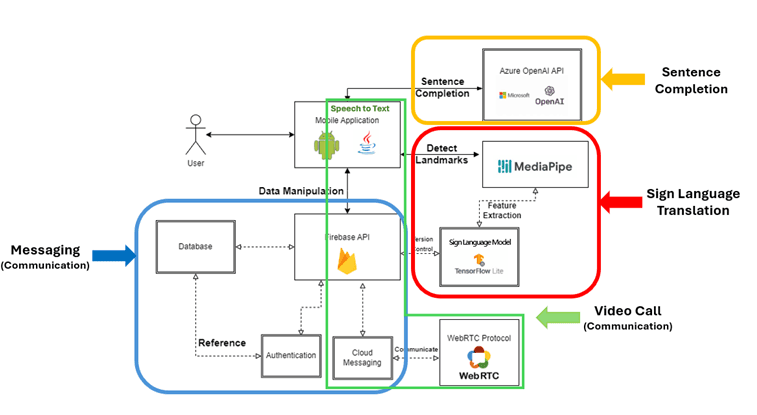

HandsTalk is a mobile app that combines computer vision and generative AI to translate sign language into English in real time. It runs on the phone's built-in camera and needs no specialized hardware, which makes it accessible to a wide audience.

Real-Time AI Translation

HandsTalk uses computer vision to recognize signs from the camera feed in real time. A generative AI model then refines the sequence of detected signs into coherent English sentences.
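The two-stage pipeline described above (detect signs, then refine them into sentences) can be sketched as follows. This is a minimal, hypothetical sketch, not HandsTalk's implementation. It assumes the recognizer emits one sign label (gloss) with a confidence score per video frame. The names `FramePrediction`, `collapse_glosses`, and `build_refinement_prompt`, and the threshold values, are illustrative assumptions. The sketch shows only the glue between the stages: smoothing noisy frame-level predictions into a sequence of distinct signs, then wrapping that sequence in a prompt for a generative model. The model call itself is omitted.

```python
from dataclasses import dataclass


@dataclass
class FramePrediction:
    """Hypothetical per-frame output of a sign recognizer (an assumption)."""
    gloss: str         # predicted sign label, e.g. "HELLO"
    confidence: float  # model confidence in [0, 1]


def collapse_glosses(frames, min_confidence=0.6, min_run=3):
    """Turn noisy per-frame predictions into a sequence of distinct signs.

    A sign is kept only if it is predicted with at least `min_confidence`
    on `min_run` consecutive frames. A sign is not repeated if the last
    emitted sign is the same one (e.g. after a brief low-confidence gap).
    """
    glosses = []
    current, run = None, 0
    for frame in frames:
        # Treat low-confidence frames as "no sign".
        label = frame.gloss if frame.confidence >= min_confidence else None
        if label == current:
            run += 1
        else:
            current, run = label, 1
        # Emit the sign once, when its run first reaches min_run frames.
        if current is not None and run == min_run:
            if not glosses or glosses[-1] != current:
                glosses.append(current)
    return glosses


def build_refinement_prompt(glosses):
    """Hypothetical prompt asking a generative model to form a sentence."""
    return (
        "Rewrite the following sign language glosses as one fluent "
        "English sentence: " + " ".join(glosses)
    )


# Usage: 4 confident HELLO frames, 2 uncertain frames, 3 confident NAME
# frames, then 5 WHAT frames below the confidence threshold.
frames = (
    [FramePrediction("HELLO", 0.9)] * 4
    + [FramePrediction("HELLO", 0.3)] * 2
    + [FramePrediction("NAME", 0.8)] * 3
    + [FramePrediction("WHAT", 0.5)] * 5
)
print(collapse_glosses(frames))  # ['HELLO', 'NAME']
print(build_refinement_prompt(collapse_glosses(frames)))
```

Requiring a sign to persist for several frames trades a little latency for stability: a single misclassified frame cannot inject a spurious word into the text handed to the generative model.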

Seamless Platform

We provide a seamless, user-friendly platform where sign language learners can improve their skills and sign language users can communicate.

Data-driven Insights

The sign language detection model is a deep learning model trained on a large body of sign language data gathered from various institutions.